What Is AI Governance: Managing Artificial Intelligence Systems

Artificial Intelligence (AI) is changing how we live, work, and interact. AI is rapidly being integrated into our daily lives and into many industries, through applications ranging from virtual assistants to self-driving cars. However, these advancements raise questions about how we can ensure that AI systems are used safely, ethically, and responsibly. This is where AI governance comes into play.

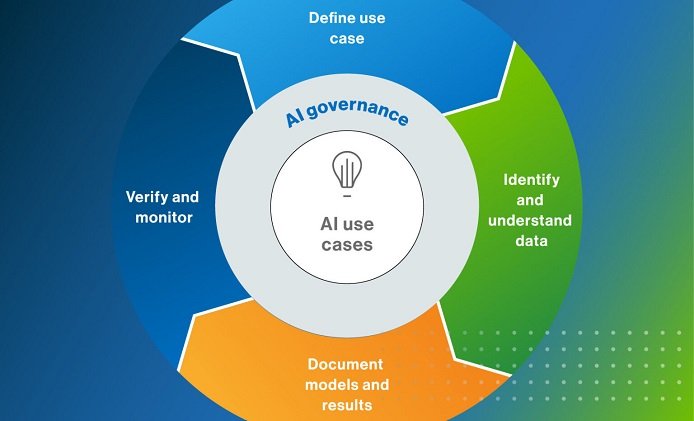

AI governance refers to the guidelines, rules, and structures that help manage AI systems in a way that balances innovation with ethical standards, accountability, and risk management. In this article, I will explain what AI governance is, why it’s so important, the challenges it faces, and how we can build effective systems to manage AI responsibly.

What Is AI Governance?

At its core, AI governance is all about setting up the right policies and frameworks to guide how AI systems are developed, deployed, and used. It’s a way to ensure that AI is beneficial, safe, and aligned with societal values.

| Component | Description |
|---|---|
| Ethics | Ensuring that AI is developed according to moral principles and does not cause harm. |
| Transparency | Making AI systems and their decision-making processes clear and understandable. |
| Accountability | Assigning responsibility for the actions and outcomes of AI systems. |
| Risk Management | Identifying and mitigating potential risks associated with AI technologies. |
| Compliance | Following the laws, regulations, and standards that apply to AI. |

These components work together to ensure that AI systems operate in a beneficial and trustworthy way. AI governance isn’t just about controlling AI; it’s about guiding its growth so that it serves the greater good.

Why Is AI Governance So Important?

AI has enormous potential to solve complex problems, but it can create new challenges without proper oversight.

- Ensuring Accountability: AI governance clarifies who is responsible if something goes wrong with an AI system, whether it’s a biased decision or a malfunction.

- Mitigating Risks: AI systems can be prone to biases, security issues, or errors. Good governance helps identify and manage these risks before they cause harm.

- Supporting Innovation: Clear guidelines and rules give companies and developers the confidence to innovate, knowing that their work aligns with ethical and legal standards.

- Building Public Trust: People are more likely to accept and use AI technologies when those technologies are transparent and accountable and when safeguards protect users from harm.
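Identifying and managing risks is often done with a risk register. The sketch below assumes a common, though not universal, scoring scheme in which each risk is rated for likelihood and impact on 1-to-5 scales and the two ratings are multiplied. The `Risk` class, the `prioritize` function, the threshold of 10, and the example risks are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a simple AI risk register (hypothetical structure)."""
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def __post_init__(self) -> None:
        # Reject ratings outside the assumed 1-5 scales
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # Likelihood x impact, giving a value from 1 to 25
        return self.likelihood * self.impact


def prioritize(risks: list[Risk], threshold: int = 10) -> list[Risk]:
    """Return risks scoring at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Illustrative entries, not real assessments
register = [
    Risk("Biased loan decisions", likelihood=3, impact=5),
    Risk("Training data leak", likelihood=2, impact=5),
    Risk("Chatbot gives outdated answer", likelihood=4, impact=2),
]

for risk in prioritize(register):
    print(f"{risk.name}: {risk.score}")
```

In this example, the loan-decision risk (score 15) and the data-leak risk (score 10) are flagged for mitigation. The chatbot risk (score 8) falls below the threshold. Real frameworks often add owners, mitigation plans, and review dates to each entry.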

With AI governance, we can avoid creating systems that may unintentionally cause harm, be biased, or fail to meet public expectations of fairness and safety.

Common Challenges in AI Governance

Managing AI systems is not easy. Organizations face several challenges when trying to establish and maintain effective AI governance.

| Challenge | Description |
|---|---|
| Bias and Fairness | Ensuring AI decisions are not biased and are fair for everyone. |
| Transparency | Making sure non-experts can understand complex AI systems. |
| Data Privacy | Protecting the privacy of individuals whose data is used to train AI models. |
| Changing Regulations | Keeping up with the evolving legal frameworks around AI in different regions. |
| Global Coordination | Managing AI governance across borders, where laws and cultural views may differ. |

Each of these challenges requires careful consideration and thoughtful solutions. For example, ensuring fairness in AI involves building diverse development teams, using representative datasets, and regularly auditing AI systems for potential biases.
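A bias audit can start with something as simple as comparing outcome rates across groups. The sketch below shows one such check, under the assumption that decisions are recorded as (group, approved) pairs. It computes each group's selection rate and the ratio of the lowest rate to the highest. The 0.8 threshold comes from the "four-fifths rule," a rule of thumb from US employment-selection guidelines. It is used here only as an example cutoff. The function names and the toy data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of positive outcomes per group.

    `decisions` is an iterable of (group, approved) pairs, where `approved` is a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    # Groups with no approvals get 0 from the defaultdict
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group received a positive outcome; ratio is undefined")
    return min(rates.values()) / highest


# Toy audit data for two hypothetical groups, A and B
decisions = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 3))
if ratio < 0.8:  # four-fifths rule of thumb
    print("Potential adverse impact: review this model")
```

Group B is approved at a rate of 0.5, compared with 0.8 for group A. That gives a ratio of 0.625, so the check flags the model. A low ratio is a signal for closer review, not proof of unfairness. Comparing selection rates (demographic parity) is also only one of several ways to define fairness.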

Building a Strong AI Governance Framework

Creating a comprehensive AI governance strategy requires several steps and ongoing efforts. While each organization may tailor its governance approach to fit its unique needs, certain best practices can help guide the way.

Establish Clear Ethical Guidelines

One of the foundational steps in AI governance is to define ethical guidelines. These principles outline the company’s values and goals for using AI. Typically, these cover fairness, transparency, privacy, and safety.

Ensure Robust Data Governance

AI systems rely heavily on data, and managing that data responsibly is critical. Data governance refers to the policies and practices that ensure data is high-quality, secure, and used ethically. This includes things like protecting personal data and making sure the datasets used for AI are representative and free of bias.
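Checking whether a dataset is representative can be as simple as comparing each group's share of the data against a reference share, for example from census figures for the population the system will serve. The sketch below makes these assumptions:

- Group counts are already available.
- The reference shares sum to 1.
- A 5-percentage-point tolerance is acceptable.

The function name, the age bands, and the numbers are hypothetical and made up for illustration.

```python
def representation_gaps(dataset_counts, reference_shares, tolerance=0.05):
    """Flag groups whose share of the dataset differs from a reference share.

    dataset_counts: mapping group -> number of records in the training data
    reference_shares: mapping group -> expected share of the population (sums to 1)
    tolerance: maximum allowed absolute difference between observed and expected share
    """
    total = sum(dataset_counts.values())
    if total == 0:
        raise ValueError("dataset is empty")
    gaps = {}
    for group, expected in reference_shares.items():
        # Groups missing from the dataset count as a share of 0
        observed = dataset_counts.get(group, 0) / total
        if abs(observed - expected) > tolerance:
            # Positive gap = over-represented, negative gap = under-represented
            gaps[group] = round(observed - expected, 3)
    return gaps


# Made-up example: records per age band vs. assumed population shares
counts = {"18-34": 5200, "35-54": 3900, "55+": 900}
reference = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}
print(representation_gaps(counts, reference))
```

Here, the 18-34 band is over-represented by about 22 points and the 55+ band is under-represented by about 26 points. The 35-54 band stays within tolerance. A report like this is a prompt to collect more data or reweight the existing data. It does not fix the dataset on its own.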

Foster Transparency and Explainability

Many AI systems, especially advanced ones like deep learning models, can be difficult to understand. To build trust, it’s important that AI systems can be explained in simple terms. This means developing AI models that are both effective and understandable to non-experts.
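Simple models are the easiest to explain. In a linear scoring model, the score is a bias term plus the sum of weight × value over all features. Each term is exactly that feature's contribution to the result. The sketch below assumes a hypothetical credit-scoring model with made-up weights, and ranks features by how strongly they moved one applicant's score. Deep learning models have no such direct breakdown. Post-hoc techniques such as SHAP or LIME are commonly used to approximate similar per-feature attributions for them.

```python
def explain_linear_score(weights, bias, features):
    """Break a linear model's score into per-feature contributions.

    For score = bias + sum(w_i * x_i), each term w_i * x_i is that feature's
    exact contribution, so the explanation is faithful to the model.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    # Most influential features first, regardless of direction
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical model weights and applicant data
weights = {"income_k": 0.04, "missed_payments": -0.9, "years_employed": 0.1}
applicant = {"income_k": 55, "missed_payments": 2, "years_employed": 3}

score, ranked = explain_linear_score(weights, bias=-1.0, features=applicant)
print(f"score = {score:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

The output shows that income raised this applicant's score the most. Missed payments lowered it by almost as much. A non-expert can follow that kind of explanation without understanding the model's internals.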

Regular Monitoring and Auditing

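AI governance does not end at deployment. Regular monitoring and auditing help ensure that AI systems keep functioning as intended and have not developed biases or errors over time. Such problems can arise, for example, when the data a system sees in production drifts away from the data it was trained on.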
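One widely used way to monitor for data drift is the Population Stability Index (PSI). PSI compares how a feature's values are distributed across bins at training time with how they are distributed in production. The formula sums (actual − expected) × ln(actual / expected) over the bins. The sketch below assumes the binning has already been done. It uses the common rule-of-thumb thresholds of 0.1 and 0.25, which are conventions rather than standards. The function name and the bin shares are hypothetical.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.

    expected, actual: lists of bin proportions (each summing to 1), e.g. the share
    of a feature's values per bin at training time vs. in production.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp empty bins to a tiny value to avoid log(0) and division by zero
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


training = [0.25, 0.25, 0.25, 0.25]    # bin shares when the model was trained
production = [0.10, 0.20, 0.30, 0.40]  # bin shares observed this month (made up)

psi = population_stability_index(training, production)
status = "investigate" if psi > 0.25 else "monitor" if psi > 0.1 else "stable"
print(f"PSI = {psi:.3f} ({status})")
```

With these made-up distributions, the PSI is about 0.23, which falls in the "monitor" band. In practice, a check like this would run on a schedule for each important input feature. It would run alongside periodic re-runs of bias audits such as the selection-rate comparison.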

Diverse and Inclusive Teams

AI systems are only as good as the people who create them. When developing AI systems, diverse teams are more likely to consider a wider range of factors and potential risks, reducing the likelihood of unintended biases.

AI Regulations Around the World

As AI becomes more prominent, governments and regulatory bodies are working to create laws and guidelines for its responsible use. Different regions have different approaches, which organizations must consider when building AI systems.

| Region | Key AI Regulations |
|---|---|
| European Union | AI Act (adopted in 2024), a broad framework that classifies AI systems by risk category. |
| United States | Sector-specific rules and guidelines, but no comprehensive federal AI law yet. |
| China | Strong government oversight with a focus on national security. |
| Japan | Guidelines focused on balancing innovation and societal values. |

These regulations are constantly evolving, and companies must stay up to date to remain compliant. For example, the European Union’s AI Act sorts AI systems into risk categories and imposes stricter rules on higher-risk systems, such as those used in healthcare or policing.
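A risk-based framework like the AI Act's can be mirrored in an organization's internal inventory of AI systems. Each use case is tagged with a risk tier, and that tier determines how much review the system gets. The sketch below uses the four tiers commonly used to describe the Act: unacceptable, high, limited, and minimal risk. The mapping from use cases to tiers is a simplified, hypothetical illustration. It is not the Act's legal test, which depends on the regulation's text and annexes.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements (e.g. risk management, documentation, human oversight)"
    LIMITED = "transparency obligations"
    MINIMAL = "no specific obligations"


# Hypothetical, simplified mapping for an internal AI inventory.
# Real classification must follow the regulation itself, not this table.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "medical_device": RiskTier.HIGH,
    "law_enforcement": RiskTier.HIGH,
    "hiring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    # Unknown use cases default to HIGH so they get reviewed rather than waved through
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)


for use_case in ["hiring", "spam_filter", "new_recommendation_engine"]:
    tier = classify(use_case)
    print(f"{use_case} -> {tier.name}: {tier.value}")
```

Defaulting unknown use cases to the high tier is a deliberately cautious design choice. New systems trigger a review until someone classifies them explicitly.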

The Benefits of AI Governance

While building an AI governance framework can be challenging, the benefits are significant.

- Reduced Risk: By addressing potential risks upfront, organizations can prevent harm caused by AI systems, such as biased decisions or privacy violations.

- Increased Trust: Transparent and accountable AI systems help earn public trust, making people more comfortable using AI.

- Regulatory Compliance: A well-designed governance framework helps ensure that AI systems comply with local and international regulations.

- Fostering Innovation: With clear rules, companies can confidently innovate, knowing that their AI systems are designed responsibly.

- Better Decision-Making: Governance helps AI systems make more reliable, fair, and accurate decisions, which improves outcomes across industries.

Frequently Asked Questions About AI Governance

Q: What is AI governance, and why is it necessary?

A: AI governance refers to the rules, policies, and guidelines that help ensure AI systems are developed and used responsibly. It’s necessary to prevent harmful outcomes, such as bias, unfairness, or privacy breaches.

Q: What are the key components of AI governance?

A: The key components include ethics, transparency, accountability, risk management, and regulatory compliance. These elements help ensure that AI systems are safe, fair, and beneficial.

Q: How can organizations implement effective AI governance?

A: Organizations can implement AI governance by establishing clear ethical guidelines, ensuring transparency in AI decision-making, regularly auditing AI systems, and complying with regulations. It’s also important to build diverse teams that consider various perspectives when developing AI systems.

Q: What are the biggest challenges in AI governance?

A: The main challenges include managing bias, ensuring data privacy, maintaining transparency, and keeping up with changing regulations. Each of these requires careful planning and ongoing attention.

Q: How do AI regulations differ around the world?

A: AI regulations vary by region. For example, the European Union has adopted the AI Act, a comprehensive risk-based framework, while the United States relies more on sector-specific guidelines. China focuses on strong government oversight, and Japan emphasizes balancing innovation with societal values.

Wrapping Up

AI governance is more than just a set of rules—it’s the foundation for ensuring that AI systems are used responsibly, ethically, and safely. As artificial intelligence continues to evolve and become more integrated into our lives, the importance of governance will only grow. By establishing clear frameworks and following best practices, we can harness the power of AI while minimizing risks, building trust, and fostering innovation.